Understanding Zeppelin Docker Parameters

There is a set of key parameters to use when running Apache Zeppelin containers. These include parameters related to the connection port, bridge networking, your MapR Data Platform cluster, security through MapR ticketing, and the MapR FUSE-Based POSIX Client. The following shows the general form of the docker run command:

docker run -it -p 9995:9995 \
  -e HOST_IP=<docker-host-ip> \
  -p 10000-10010:10000-10010 \
  -p 11000-11010:11000-11010 \
  -e MAPR_CLUSTER=<cluster-name> \
  -e MAPR_CLDB_HOSTS=<cldb-ip-list> \
  -e MAPR_CONTAINER_USER=<user-name> \
  -e MAPR_CONTAINER_PASSWORD=<password> \
  -e MAPR_CONTAINER_GROUP=<group-name> \
  -e MAPR_CONTAINER_UID=<uid> \
  -e MAPR_CONTAINER_GID=<gid> \
  -e MAPR_TICKETFILE_LOCATION=<ticket-file-container-location> \
  -v <ticket-file-host-location>:<ticket-file-container-location>:ro \
  -e MAPR_MOUNT_PATH=<path-to-fuse-mount-point> \
  --cap-add SYS_ADMIN \
  --cap-add SYS_RESOURCE \
  --device /dev/fuse \
  --security-opt apparmor:unconfined \
  -e MAPR_HS_HOST=<historyserver-ip> \
  -e ZEPPELIN_NOTEBOOK_DIR=<path-for-notebook-storage> \
  -e MAPR_TZ=<time-zone> \
  maprtech/data-science-refinery:v1.4.1_6.1.0_6.3.0_centos7

The following is a sample command:

docker run -it -p 9995:9995 \
  -e HOST_IP=172.24.9.151 \
  -p 10000-10010:10000-10010 \
  -p 11000-11010:11000-11010 \
  -e MAPR_CLUSTER=my.cluster.com \
  -e MAPR_CLDB_HOSTS=172.24.11.84,172.24.8.72,172.24.9.248 \
  -e MAPR_CONTAINER_USER=mapruser1 \
  -e MAPR_CONTAINER_PASSWORD=SeCreTpAsSw0 \
  -e MAPR_CONTAINER_GROUP=mapr \
  -e MAPR_CONTAINER_UID=5000 \
  -e MAPR_CONTAINER_GID=5000 \
  -e MAPR_TICKETFILE_LOCATION=/tmp/mapr_ticket \
  -v /home/mapruser1/mapr_ticket:/tmp/mapr_ticket:ro \
  -e MAPR_MOUNT_PATH=/mapr \
  --cap-add SYS_ADMIN \
  --cap-add SYS_RESOURCE \
  --device /dev/fuse \
  --security-opt apparmor:unconfined \
  -e MAPR_HS_HOST=172.24.9.248 \
  -e ZEPPELIN_NOTEBOOK_DIR=/mapr/my.cluster.com/user/mapruser1/notebook \
  -e MAPR_TZ=US/Pacific \
  maprtech/data-science-refinery:v1.4.1_6.1.0_6.3.0_centos7

The following sections describe each category of parameters in more detail. Where appropriate, the descriptions reference the sample command.

For a list of all MapR-specific environment variables, refer to the MapR-Specific Environment Variables section at the end of this topic.

Connection Port

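To use a different port for connecting to the Zeppelin UI, set the ZEPPELIN_SSL_PORT environment variable in your docker run command and publish that <port-number> with -p:

docker run -it \
  ...
  -e ZEPPELIN_SSL_PORT=<port-number> \
  -p <port-number>:<port-number> \
  ...

The -p <port-number>:<port-number> parameter in your docker run command takes the place of the -p 9995:9995 parameter shown in the sample command.

Bridge Networking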

By default, Docker uses bridge networking. In general, bridge networking provides better isolation from the host machine and other containers.

Specify the HOST_IP environment variable and the -p 10000-10010:10000-10010 and -p 11000-11010:11000-11010 parameters when using bridge networking:

docker run -it \
  ...
  -e HOST_IP=<docker-host-ip> \
  -p 10000-10010:10000-10010 \
  -p 11000-11010:11000-11010 \
  ...

The <docker-host-ip> must be an actual IP address. If you are running the container on your laptop, you cannot specify localhost as the IP address.
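A minimal sketch of a pre-flight check for this rule, written as a hypothetical POSIX shell function (check_host_ip is not part of MapR Data Science Refinery). It assumes HOST_IP is a dotted IPv4 address, and it also rejects every 127.x.x.x loopback address, which goes slightly beyond the localhost rule stated above:

```shell
#!/bin/sh
# Hypothetical pre-flight helper (not part of MapR Data Science Refinery):
# succeeds only if $1 looks like a usable IPv4 address for HOST_IP.
check_host_ip() {
  local ip="$1" old_ifs="$IFS" octet
  case $ip in
    localhost|127.*)
      # The text rules out localhost; rejecting all 127.x.x.x addresses is
      # an assumption of this sketch.
      echo "HOST_IP must be the Docker host's actual IP address, not $ip" >&2
      return 1 ;;
    ''|*[!0-9.]*|.*|*.|*..*)
      echo "HOST_IP is not a dotted IPv4 address: $ip" >&2
      return 1 ;;
  esac
  # Split on dots; expect exactly four octets, each in the range 0-255.
  IFS=.
  set -- $ip
  IFS="$old_ifs"
  if [ "$#" -ne 4 ]; then
    echo "HOST_IP must have four octets: $ip" >&2
    return 1
  fi
  for octet in "$@"; do
    case $octet in
      ????*) echo "invalid octet in HOST_IP: $ip" >&2; return 1 ;;
    esac
    if [ "$octet" -gt 255 ]; then
      echo "invalid octet in HOST_IP: $ip" >&2
      return 1
    fi
  done
  return 0
}

# Sample value from the sample command.
check_host_ip 172.24.9.151 && echo "HOST_IP looks usable"
```

In a launch script, the check can guard the command, for example check_host_ip "$HOST_IP" && docker run ...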

Specifying the 10000-10010 port range reserves the range for the Livy launcher. If you already use these ports for other purposes, use the LIVY_RSC_PORT_RANGE environment variable to specify a different range.

If you plan to use the Spark interpreter, you must reserve the 11000-11010 port range for Spark. To reserve a different port range, use the SPARK_PORT_RANGE environment variable.

The following example reserves ports 10011-10021 for the Livy launcher and ports 13011-13021 for Spark:

docker run -it \
  ...
  -e HOST_IP=<docker-host-ip> \
  -p 10011-10021:10011-10021 \
  -e LIVY_RSC_PORT_RANGE="10011~10021" \
  -p 13011-13021:13011-13021 \
  -e SPARK_PORT_RANGE="13011~13021" \
  ...

Note that the example separates the start and end of each range with a tilde (~) in the LIVY_RSC_PORT_RANGE and SPARK_PORT_RANGE environment variables, but with a hyphen in the -p parameters.

To use host networking, specify the following in your docker run command instead:

docker run -it \
  ...
  --network=host \
  -e HOST_IP=<docker-host-ip> \
  ...

See https://docs.docker.com/engine/userguide/networking/ for more details about Docker networking.
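When you change a reserved range, the -p parameter and the matching environment variable describe the same ports in two notations, so deriving both from one start and end port keeps them in sync. A sketch with a hypothetical helper, port_range_args, assuming the start~end notation used in the example for LIVY_RSC_PORT_RANGE and SPARK_PORT_RANGE:

```shell
#!/bin/sh
# Hypothetical helper: print the -p and -e parameters for one reserved range.
# $1 is LIVY_RSC_PORT_RANGE or SPARK_PORT_RANGE; $2 and $3 are the first and
# last port. Docker's -p takes start-end, the variables take start~end.
port_range_args() {
  local var="$1" start="$2" end="$3" p
  for p in "$start" "$end"; do
    case $p in
      ''|*[!0-9]*) echo "not a port number: $p" >&2; return 1 ;;
    esac
  done
  if [ "$start" -gt "$end" ]; then
    echo "invalid range: $start-$end" >&2
    return 1
  fi
  printf '%s\n' "-p $start-$end:$start-$end -e $var=$start~$end"
}

# The ranges from the example above.
port_range_args LIVY_RSC_PORT_RANGE 10011 10021
port_range_args SPARK_PORT_RANGE 13011 13021
```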

MapR Clusters

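Specify the name of your MapR cluster with MAPR_CLUSTER and the comma-separated list of CLDB host IP addresses with MAPR_CLDB_HOSTS. The sample command uses the following values:

docker run -it \
  ...
  -e MAPR_CLUSTER=my.cluster.com \
  -e MAPR_CLDB_HOSTS=172.24.11.84,172.24.8.72,172.24.9.248 \
  ...

MapR Ticketing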

If your MapR cluster is secure, you need a copy of the MapR ticket on your local host so that you can specify it as a mount point in your docker run command. This makes the ticket visible to the Zeppelin container. The sample command shown earlier uses MapR tickets.

To determine whether your cluster is secure, view the contents of the file /opt/mapr/conf/mapr-clusters.conf on your MapR cluster. For example, the following shows a secure cluster:

my.cluster.com secure=true ip-172-24-11-84

If your cluster is secure, follow these steps to make the ticket visible to the Zeppelin container:

- Generate a service ticket for the container user by following the instructions at Generating a Service Ticket.

- Copy the generated ticket file to your local host machine. This is your source ticket file.

- Change the owner and group of your source ticket file so that they match the UID and GID in the ticket file.

- Specify the source ticket path in the Docker mount point, as described in the table below.
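The security check and the ownership step can be scripted on the Docker host. A minimal sketch with two hypothetical helpers (not MapR tools), assuming a copy of mapr-clusters.conf is readable locally and that stat supports -c, as in GNU coreutils or BusyBox:

```shell
#!/bin/sh
# Hypothetical helpers for the steps above; they are not MapR tools.

# Succeed if the line for cluster $2 in the mapr-clusters.conf file $1
# contains the field secure=true.
is_secure_cluster() {
  awk -v c="$2" '
    $1 == c { for (i = 2; i <= NF; i++) if ($i == "secure=true") found = 1 }
    END { exit !found }' "$1"
}

# Succeed if the ticket file $1 is owned by UID $2 and GID $3.
# Assumes GNU coreutils or BusyBox stat (stat -c).
ticket_owner_matches() {
  [ "$(stat -c '%u:%g' "$1")" = "$2:$3" ]
}

# Example with the sample values from this topic, using a local copy of
# /opt/mapr/conf/mapr-clusters.conf:
#   is_secure_cluster mapr-clusters.conf my.cluster.com && echo "cluster is secure"
#   sudo chown 5000:5000 /home/mapruser1/mapr_ticket
#   ticket_owner_matches /home/mapruser1/mapr_ticket 5000 5000 && echo "ownership OK"
```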

The table lists the parameters related to MapR tickets and their values in the sample command:

| Parameter | Sample Parameter Value | Details |
|---|---|---|
| MAPR_CONTAINER_USER | mapruser1 | The only user who can access the notebook |
| MAPR_CONTAINER_PASSWORD | SeCreTpAsSw0 | The password you use to log in to your Zeppelin notebook. This password does not need to match the password in your MapR cluster. If not specified, it defaults to the value of MAPR_CONTAINER_USER. |
| MAPR_CONTAINER_GROUP | mapr | Name of the container user's group |
| MAPR_CONTAINER_UID | 5000 | UID of the container user; must be consistent with the value in the ticket file |
| MAPR_CONTAINER_GID | 5000 | GID of the container user; must be consistent with the value in the ticket file |
| MAPR_TICKETFILE_LOCATION | /tmp/mapr_ticket | Location of the ticket file in the container |
| -v <ticket-file-host-location>:<ticket-file-container-location>:ro | -v /home/mapruser1/mapr_ticket:/tmp/mapr_ticket:ro | Docker mount point for the source and destination of your ticket file: <ticket-file-host-location> is the path of the source ticket file on your host, and <ticket-file-container-location> is its path in the container, the same value as MAPR_TICKETFILE_LOCATION |

See Security Considerations for the MapR PACC for further information.
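The container-side path appears twice in the table, once in MAPR_TICKETFILE_LOCATION and once in the -v mount, and the two need to agree. A sketch with a hypothetical helper, ticket_args (not a MapR tool), that takes each path once and builds both parameters; rejecting paths that contain a colon reflects that -v uses colons to separate its fields:

```shell
#!/bin/sh
# Hypothetical helper: build the ticket-related parameters so that the
# container-side path is written once and reused in both places.
ticket_args() {
  local host_path="$1" container_path="$2"
  case "$host_path$container_path" in
    *:*)
      # -v separates its fields with ':', so the paths must not contain one.
      echo "ticket paths must not contain ':'" >&2
      return 1 ;;
  esac
  printf '%s\n' "-e MAPR_TICKETFILE_LOCATION=$container_path -v $host_path:$container_path:ro"
}

# Sample values from the table above.
ticket_args /home/mapruser1/mapr_ticket /tmp/mapr_ticket
```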

FUSE-Based POSIX Client

With the FUSE POSIX Client for File-Based Applications, you can access the MapR File System using POSIX shell commands instead of Hadoop commands. To do so, you must specify the MapR File System mount point environment variable (MAPR_MOUNT_PATH) and other FUSE parameters in your docker run command. In the sample command shown earlier, the following are the relevant parameters and their settings:

| Parameter | Sample Parameter Value |
|---|---|
| MAPR_MOUNT_PATH | /mapr |
| --cap-add | SYS_ADMIN |
| --cap-add | SYS_RESOURCE |
| --device | /dev/fuse |
| --security-opt | apparmor:unconfined |

All of these parameters are required except --security-opt. You must specify --security-opt if you are running on an Ubuntu host.
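A sketch that prints the FUSE parameters from the table and adds --security-opt only on Ubuntu hosts. The helper name fuse_args and the use of the ID field in /etc/os-release to detect Ubuntu are assumptions of this sketch, not part of the product:

```shell
#!/bin/sh
# Hypothetical helper: print the FUSE-related parameters from the table above.
# $1 is the mount path; $2 is an os-release file (default /etc/os-release).
# --security-opt is added only when that file reports ID=ubuntu.
fuse_args() {
  local mount_path="$1" os_release="${2:-/etc/os-release}"
  local args="-e MAPR_MOUNT_PATH=$mount_path --cap-add SYS_ADMIN --cap-add SYS_RESOURCE --device /dev/fuse"
  if [ -r "$os_release" ] && grep -Eq '^ID="?ubuntu"?$' "$os_release"; then
    args="$args --security-opt apparmor:unconfined"
  fi
  printf '%s\n' "$args"
}

# Mount path from the sample command; the output depends on the host OS.
fuse_args /mapr
```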

If the FUSE-Based POSIX Client does not start, check the log file /opt/mapr/logs/posix-client-container.log inside your container.

Pig, Livy, and Spark Interpreters

If you use the Pig, Livy, or Spark interpreters, set the MAPR_HS_HOST environment variable to the IP address of your MapR cluster's HistoryServer:

docker run -it ... -e MAPR_HS_HOST=172.24.9.248 ...

This improves the performance of those interpreters. If your MapR cluster does not have a HistoryServer, your Pig and Spark jobs will run, but they may perform poorly.

Notebook Storage

The environment variable ZEPPELIN_NOTEBOOK_DIR specifies where to store your notebooks. If you do not specify ZEPPELIN_NOTEBOOK_DIR, Zeppelin stores your notebooks in the directory /opt/mapr/zeppelin/zeppelin-0.8.0/notebook.

Storage Options

You can store your notebooks in the MapR File System, with or without the FUSE-Based POSIX Client, or in your container's local filesystem:

- MapR File System using the FUSE-Based POSIX Client

In the sample command shown earlier, MapR Data Science Refinery stores your notebooks in a directory named /user/mapruser1/notebook in the MapR File System using the FUSE-Based POSIX Client. The example assumes your MapR File System mount point is /mapr and your cluster name is my.cluster.com:

docker run -it ... \
  -e ZEPPELIN_NOTEBOOK_DIR=/mapr/my.cluster.com/user/mapruser1/notebook ...

IMPORTANT: You must specify the parameters used by the FUSE-Based POSIX Client. If Docker is unable to start the MapR FUSE-Based POSIX Client, you cannot open Zeppelin in your browser. Your browser returns the following error:

HTTP ERROR: 503

- MapR File System without the FUSE-Based POSIX Client

Starting in MapR Data Science Refinery 1.3, you can store your notebooks in the MapR File System without using the MapR FUSE-Based POSIX Client. To use this option, specify the full MapR File System path in the ZEPPELIN_NOTEBOOK_DIR variable. The following example is equivalent to the previous one:

docker run -it ... \
  -e ZEPPELIN_NOTEBOOK_DIR=maprfs:///user/mapruser1/notebook ...

- Local filesystem

To store your notebooks in your local filesystem, specify the container's local path in ZEPPELIN_NOTEBOOK_DIR:

docker run -it ... \
  -e ZEPPELIN_NOTEBOOK_DIR=/opt/mapr/notebook ...
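The three options differ only in the form of the ZEPPELIN_NOTEBOOK_DIR value. A sketch with a hypothetical helper, storage_option, that classifies a value the way the options above describe it, assuming FUSE paths start with the MAPR_MOUNT_PATH value (/mapr in the sample command):

```shell
#!/bin/sh
# Hypothetical helper: report which storage option a ZEPPELIN_NOTEBOOK_DIR
# value selects. $2 is the FUSE mount path (default /mapr).
storage_option() {
  local dir="$1" mount_path="${2:-/mapr}"
  mount_path="${mount_path%/}"
  case "$dir" in
    maprfs://*) echo maprfs ;;      # MapR File System without the FUSE-Based POSIX Client
    "$mount_path"/*) echo fuse ;;   # MapR File System through the FUSE mount point
    /*) echo local ;;               # container's local filesystem
    *)
      echo "expected a maprfs:// URI or an absolute path: $dir" >&2
      return 1 ;;
  esac
}

# Value from the sample command.
storage_option /mapr/my.cluster.com/user/mapruser1/notebook
```

A result of fuse is a reminder that the FUSE parameters must also be present in the docker run command.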

Zeppelin Tutorial Notebook

If you set ZEPPELIN_NOTEBOOK_DIR, perform the following steps to enable

access to the tutorial:

- Manually move the tutorial notebook from the default directory to your specified

notebook directory.

The following command to move the tutorial from the default location to the MapR File System path should be run from inside the container running Zeppelin:

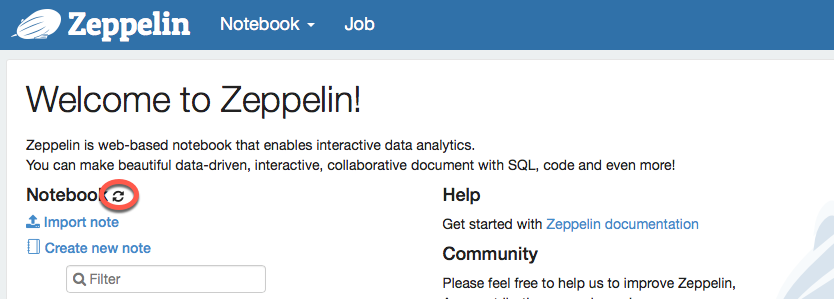

cp -r /opt/mapr/zeppelin/zeppelin-0.8.0/notebook/* /mapr/my.cluster.com/user/mapruser1/notebook/hadoop fs -put /opt/mapr/zeppelin/zeppelin-0.8.0/notebook/* maprfs:///user/mapruser1/notebook/ - After moving the notebook, make sure you reload your notebooks from storage by

clicking on the icon circled in red below:
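The copy step can be wrapped in one hypothetical function, copy_tutorial, that picks cp or hadoop fs -put based on the destination; run it inside the Zeppelin container. Creating the destination directory first is an addition of this sketch and is not part of the steps above:

```shell
#!/bin/sh
# Hypothetical helper: copy the tutorial notebooks into the notebook directory.
# $1 is the destination (for example "$ZEPPELIN_NOTEBOOK_DIR");
# $2 is the source, defaulting to the directory named in the text.
copy_tutorial() {
  local dest="${1%/}"
  local src="${2:-/opt/mapr/zeppelin/zeppelin-0.8.0/notebook}"
  case "$dest" in
    maprfs://*)
      # MapR File System path without the FUSE client: use the Hadoop CLI.
      hadoop fs -mkdir -p "$dest" && hadoop fs -put "$src"/* "$dest"/ ;;
    *)
      # FUSE mount point or local path: a regular recursive copy works.
      mkdir -p "$dest" && cp -r "$src"/* "$dest"/ ;;
  esac
}

# Example: copy_tutorial "$ZEPPELIN_NOTEBOOK_DIR"
```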

Python Version

By default, when you use Python with either the Livy or Spark interpreter, the interpreters use Python 2. Although you can run only one version of Python with MapR Data Science Refinery, you can switch to Python 3 by setting the PYSPARK_PYTHON environment variable to the absolute path of the Python 3 executable on your MapR cluster:

docker run -it ... -e PYSPARK_PYTHON=/usr/local/bin/python3.6 ...

You can also install custom Python packages. See Installing Custom Packages for PySpark.

Idle Interpreter Timeout Threshold

Starting with the 1.3 release, MapR Data Science Refinery by default terminates interpreters that have been idle for one hour. To modify this idle timeout threshold, specify the ZEPPELIN_INTERPRETER_LIFECYCLE_MANAGER_TIMEOUT_THRESHOLD environment variable. The value is in milliseconds.

The following example sets the idle timeout to 10 minutes:

docker run -it ... \
  -e ZEPPELIN_INTERPRETER_LIFECYCLE_MANAGER_TIMEOUT_THRESHOLD=600000 ...

If a Spark job terminates because the Spark interpreter reached the timeout threshold, the job shows a status of FAILED in the YARN UI.
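Because the value is in milliseconds, converting from minutes avoids factor-of-1000 mistakes: 10 minutes is 10 * 60 * 1000 = 600000 ms, and the one-hour default corresponds to 3600000 ms. A hypothetical helper, minutes_to_ms, that performs the conversion:

```shell
#!/bin/sh
# Hypothetical helper: convert whole minutes to the milliseconds expected by
# ZEPPELIN_INTERPRETER_LIFECYCLE_MANAGER_TIMEOUT_THRESHOLD.
minutes_to_ms() {
  # Reject empty input, non-digits, and leading zeros ($(( )) reads 010 as octal).
  case $1 in
    ''|*[!0-9]*|0?*)
      echo "minutes must be a whole number without leading zeros: $1" >&2
      return 1 ;;
  esac
  echo $(( $1 * 60 * 1000 ))
}

# The 10-minute timeout from the example above.
printf '%s\n' "-e ZEPPELIN_INTERPRETER_LIFECYCLE_MANAGER_TIMEOUT_THRESHOLD=$(minutes_to_ms 10)"
```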

Configuration Storage

By default, the following Zeppelin configuration files are stored in /opt/mapr/zeppelin/zeppelin-0.8.0/conf/:

- interpreter.json
- notebook-authorization.json

Starting with MapR Data Science Refinery 1.3, you can store these files in the MapR File System by specifying the ZEPPELIN_CONFIG_FS_DIR environment variable. You can also specify a local filesystem path for this variable.

The following shows sample commands for the three available options:

- MapR File System using the MapR FUSE-Based POSIX Client

This example assumes your MapR File System mount point is /mapr and your cluster name is my.cluster.com:

docker run -it ... \
  -e ZEPPELIN_CONFIG_FS_DIR=/mapr/my.cluster.com/user/mapruser1/dsrconf ...

IMPORTANT: You must specify the parameters used by the FUSE-Based POSIX Client to use this option.

- MapR File System without the MapR FUSE-Based POSIX Client

The following example is equivalent to the previous one:

docker run -it ... \
  -e ZEPPELIN_CONFIG_FS_DIR=maprfs:///user/mapruser1/dsrconf ...

- Local filesystem

The following specifies a local filesystem path:

docker run -it ... \
  -e ZEPPELIN_CONFIG_FS_DIR=/opt/mapr/dsrconf ...

If all of the following apply, you must restart all containers to enable the new configuration settings:

- ZEPPELIN_CONFIG_FS_DIR is set to a MapR File System path
- Multiple containers share the two configuration files
- You make a change in either of the configuration files that requires a container restart

Default Drill JDBC Connection URL

The default Drill JDBC connection URL is jdbc:drill:drillbit=drillbitnode:31010. Starting with MapR Data Science Refinery 1.3, you can configure this default URL using one of the following two environment variables:

MAPR_DRILLBITS_HOSTS

- Description

Comma-separated list of Drillbit servers and optional port numbers. Use this variable if you want to connect to Drill through a Drillbit server. If you do not specify port numbers, they default to 31010.

- Sample commands

docker run -it ... \
  -e MAPR_DRILLBITS_HOSTS=node1.cluster.com,node2.cluster.com ...

docker run -it ... \
  -e MAPR_DRILLBITS_HOSTS=node1.cluster.com:31010,node2.cluster.com:31010 ...

- Resulting URL

jdbc:drill:drillbit=node1.cluster.com:31010,node2.cluster.com:31010

MAPR_ZK_QUORUM

- Description

Comma-separated list of servers and optional port numbers in your ZooKeeper cluster. Use this variable if you want to connect to Drill through a ZooKeeper cluster. If you do not specify port numbers, they default to 5181.

- Sample commands

docker run -it ... \
  -e MAPR_ZK_QUORUM=node1.cluster.com,node2.cluster.com,node3.cluster.com \
  ...

docker run -it ... \
  -e MAPR_ZK_QUORUM=node1.cluster.com:5181,node2.cluster.com:5181,node3.cluster.com:5181 \
  ...

- Resulting URL

jdbc:drill:zk=node1.cluster.com:5181,node2.cluster.com:5181,node3.cluster.com:5181/drill/my.cluster.com-drillbits

Specify only one of the two environment variables. If you mistakenly specify both, MAPR_DRILLBITS_HOSTS takes precedence.

See Start the Drill Shell (SQLLine) for more information about the Drill JDBC connection URL. See Drill JDBC for more information about setting other options in your Drill JDBC connection string for MapR Data Science Refinery.
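The rules above (default ports, precedence, and the two URL formats) can be summarized in a shell sketch. drill_jdbc_url and add_default_port are hypothetical helpers that reproduce the documented results; they are not the code inside the image. Filling the my.cluster.com part of the ZooKeeper path from MAPR_CLUSTER is an assumption based on the sample values:

```shell
#!/bin/sh
# Hypothetical helpers that mirror the documented rules; this is not the
# logic shipped in the MapR Data Science Refinery image.

# Append ":$2" to every comma-separated host in $1 that has no port.
add_default_port() {
  local list="$1" port="$2" out="" host old_ifs="$IFS"
  IFS=,
  for host in $list; do
    case $host in
      *:*) ;;                    # port already given
      *) host="$host:$port" ;;   # use the default port
    esac
    out="${out:+$out,}$host"
  done
  IFS="$old_ifs"
  printf '%s\n' "$out"
}

drill_jdbc_url() {
  if [ -n "${MAPR_DRILLBITS_HOSTS:-}" ]; then
    # MAPR_DRILLBITS_HOSTS takes precedence when both variables are set.
    printf 'jdbc:drill:drillbit=%s\n' "$(add_default_port "$MAPR_DRILLBITS_HOSTS" 31010)"
  elif [ -n "${MAPR_ZK_QUORUM:-}" ]; then
    if [ -z "${MAPR_CLUSTER:-}" ]; then
      echo "MAPR_CLUSTER is needed for the ZooKeeper URL (assumption)" >&2
      return 1
    fi
    printf 'jdbc:drill:zk=%s/drill/%s-drillbits\n' \
      "$(add_default_port "$MAPR_ZK_QUORUM" 5181)" "$MAPR_CLUSTER"
  else
    # Neither variable set: the documented default URL.
    echo 'jdbc:drill:drillbit=drillbitnode:31010'
  fi
}

# Example: MAPR_DRILLBITS_HOSTS=node1.cluster.com,node2.cluster.com drill_jdbc_url
```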

MapR-Specific Environment Variables

The following table lists all MapR-specific environment variables you can use in your docker run command. The second column contains a short description of each variable. The third column provides links to detailed descriptions, including situations where you need to use each variable.

| Environment Variable | Description | Documentation Link |
|---|---|---|
| HOST_IP | IP address of your Docker host machine | Bridge Networking |
| LIVY_RSC_PORT_RANGE | Port range reserved for the Livy launcher | Bridge Networking; Running Multiple Zeppelin Containers on a Single Host |
| MAPR_CLUSTER | Name of your MapR cluster | MapR Clusters |
| MAPR_CLDB_HOSTS | List of CLDB host IPs | MapR Clusters |
| MAPR_CONTAINER_GID | GID of the container user | MapR Ticketing |
| MAPR_CONTAINER_GROUP | Group name of the container user | MapR Ticketing |
| MAPR_CONTAINER_PASSWORD | Password used to log in to the container UI | MapR Ticketing |
| MAPR_CONTAINER_UID | UID of the container user | MapR Ticketing |
| MAPR_CONTAINER_USER | Name of the container user | MapR Ticketing |
| MAPR_DRILLBITS_HOSTS | Comma-separated list of Drillbit servers for connecting to Drill | Default Drill JDBC Connection URL |
| MAPR_HS_HOST | IP address of your MapR cluster's HistoryServer | Pig, Livy, and Spark Interpreters |
| MAPR_MOUNT_PATH | Path of the mount point for the MapR File System | FUSE-Based POSIX Client |
| MAPR_TICKETFILE_LOCATION | Location of the MapR ticket file in your container | MapR Ticketing |
| MAPR_TZ | Time zone inside the container | Running the MapR PACC Using Docker |
| MAPR_ZK_QUORUM | Comma-separated list of servers in your ZooKeeper cluster for connecting to Drill | Default Drill JDBC Connection URL |
| PYSPARK_PYTHON | Location of the Python 3 executable on your MapR cluster | Python Version |
| SPARK_PORT_RANGE | Port range reserved for the Spark interpreter | Bridge Networking; Running Multiple Zeppelin Containers on a Single Host |
| ZEPPELIN_ARCHIVE_PYTHON | Path containing your archive with custom Python packages | Installing Custom Packages for PySpark |
| ZEPPELIN_CONFIG_FS_DIR | Path containing the Zeppelin configuration files interpreter.json and notebook-authorization.json | Configuration Storage |
| ZEPPELIN_DEPLOY_MODE | Set to kubernetes if running Zeppelin as a Kubernetes service | Running MapR Data Science Refinery as a Kubernetes Service |
| ZEPPELIN_INTERPRETER_LIFECYCLE_MANAGER_TIMEOUT_THRESHOLD | Timeout threshold, in milliseconds, that determines when to terminate idle interpreters | Idle Interpreter Timeout Threshold |
| ZEPPELIN_NOTEBOOK_DIR | Location to store your Zeppelin notebooks | Notebook Storage |
| ZEPPELIN_SSL_PORT | Port number for connecting to the Zeppelin UI | Connection Port |
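One way to manage this many variables is Docker's --env-file option. A minimal sketch, assuming a POSIX shell: the hypothetical write_env_file function writes each variable from the table that is set in the current shell as a VAR=value line. Docker does not remove quotes from --env-file values, so the values are written unquoted; because the file can hold MAPR_CONTAINER_PASSWORD, the sketch restricts its permissions to the owner.

```shell
#!/bin/sh
# Variable names from the table above.
DSR_VARS="HOST_IP LIVY_RSC_PORT_RANGE MAPR_CLUSTER MAPR_CLDB_HOSTS
MAPR_CONTAINER_GID MAPR_CONTAINER_GROUP MAPR_CONTAINER_PASSWORD
MAPR_CONTAINER_UID MAPR_CONTAINER_USER MAPR_DRILLBITS_HOSTS MAPR_HS_HOST
MAPR_MOUNT_PATH MAPR_TICKETFILE_LOCATION MAPR_TZ MAPR_ZK_QUORUM
PYSPARK_PYTHON SPARK_PORT_RANGE ZEPPELIN_ARCHIVE_PYTHON
ZEPPELIN_CONFIG_FS_DIR ZEPPELIN_DEPLOY_MODE
ZEPPELIN_INTERPRETER_LIFECYCLE_MANAGER_TIMEOUT_THRESHOLD
ZEPPELIN_NOTEBOOK_DIR ZEPPELIN_SSL_PORT"

# Hypothetical helper: write every non-empty variable from DSR_VARS to $1.
write_env_file() {
  local out="$1" var val
  : > "$out" && chmod 600 "$out" || return 1
  for var in $DSR_VARS; do
    # Indirect lookup of the variable named by $var; the names are fixed above.
    eval "val=\${$var-}"
    if [ -n "$val" ]; then
      printf '%s=%s\n' "$var" "$val" >> "$out"
    fi
  done
}

# Example:
#   write_env_file dsr.env
#   docker run -it --env-file dsr.env -p 9995:9995 ... maprtech/data-science-refinery:...
```

The -p, -v, --cap-add, --device, and --security-opt parameters are not environment variables, so they still go on the docker run command line.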