Using Service Verification

The service verification feature provides an easy way to verify that services on all nodes in the cluster are running and functional.

IMPORTANT: Service verification is not currently implemented for all services. Support for additional services will be added in subsequent releases.

For secure or non-secure clusters, you can run the service verification feature from the Installer user interface. Service verification is useful after a new installation. For example, it can detect whether all services have successfully joined the cluster. You can also run service verification at any time after the cluster is installed to check the general health of services on all nodes.

Before Using Service Verification

Before you can use the service verification feature:

- The cluster must already be installed, and it must have been installed by using the Installer or Installer Stanzas.

- Ensure that the Installer is up to date. Service verification is supported only on Installer versions 1.15.0.0 and later.

- Service verification can only be performed from the Installer node. The Installer node is the node where you run the Installer.

- Running service verification requires root-user access (or sudoer access to root) for remote authentication. When you perform service verification, the Installer node must ssh into each of the cluster nodes.
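The Installer version requirement above reduces to a simple version comparison. The following Python sketch assumes you already have the Installer version as a purely numeric dotted string. Reading that value from the Installer is not covered here. The function names are hypothetical and are not part of the Installer.

```python
# Minimal sketch: check whether an Installer version string meets the
# documented minimum for service verification (1.15.0.0 or later).
# Assumption: the caller already has the version as a purely numeric
# dotted string such as "1.16.0.0"; non-numeric parts raise ValueError.

MIN_VERSION = (1, 15, 0, 0)


def parse_version(version: str) -> tuple[int, ...]:
    """Convert a dotted version such as '1.15.0.0' into a tuple of ints."""
    return tuple(int(part) for part in version.strip().split("."))


def supports_service_verification(version: str) -> bool:
    """Return True if the Installer version is 1.15.0.0 or later."""
    parts = parse_version(version)
    # Pad shorter versions (for example '1.15') with zeros so the
    # tuple comparison lines up field by field.
    padded = parts + (0,) * (len(MIN_VERSION) - len(parts))
    return padded >= MIN_VERSION


if __name__ == "__main__":
    for v in ["1.14.0.0", "1.15.0.0", "1.16.0.0", "1.15"]:
        print(v, supports_service_verification(v))
```

Comparing integer tuples instead of raw strings avoids the trap where "1.9" sorts after "1.15" lexically.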

Performing Service Verification Using the Installer User Interface

To perform service verification from the Installer user interface:

- On the Installer node, use a browser to navigate to the Installer home page, and log

on as the cluster

admin:

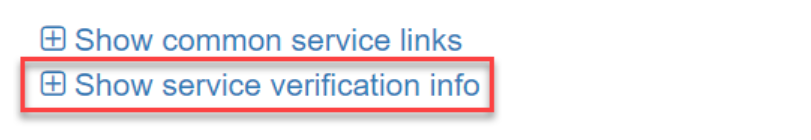

https://<Installer Node hostname/IPaddress>:9443 - Scroll down until you see the following links:

- Click the Show service verification info link to display the list of services and nodes.

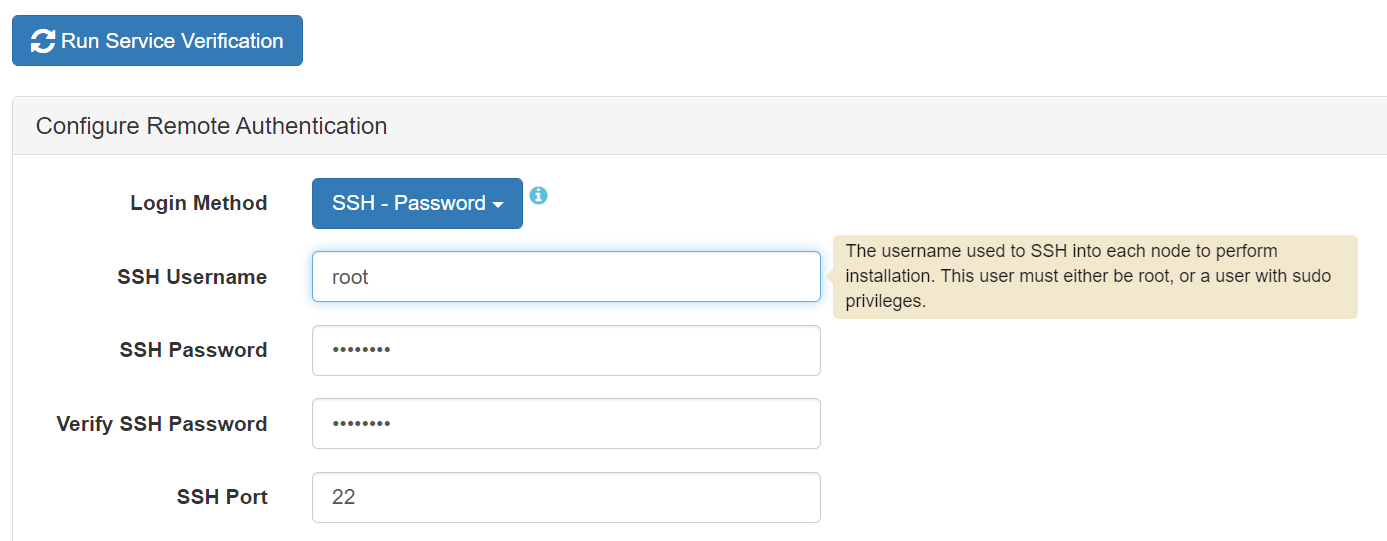

- Click Run Service Verification to start the verification. The

Installer prompts for the

rootuser password:

- Enter the

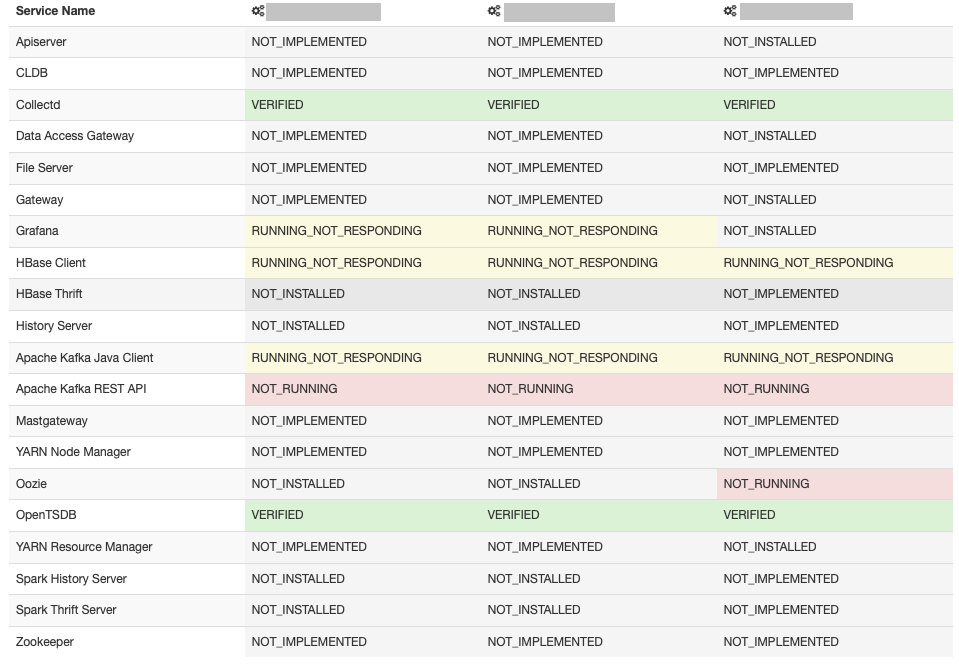

rootuser credentials, and click Run Service Verification. Verification can take anywhere from a few seconds to several minutes, depending on the size of the cluster, the network, and other attributes that affect performance. When the verification activity is complete, the Installer shows a list of services with the verification output for each service. For example: Possible values are:

Possible values are:- FAILED_TO_EXECUTE

- NOT_IMPLEMENTED

- NOT_INSTALLED

- NOT_RUNNING

- NOT_STARTED

- RUNNING_NOT_RESPONDING

- VERIFIED

- To rerun service verification (for example, after running it once and discovering that some services are not responding), click Run Service Verification again.
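If you want to triage the results before rerunning verification, the following Python sketch groups the status values listed above. The grouping is an assumption of this sketch and not documented Installer behavior. It treats VERIFIED as healthy, and it treats NOT_IMPLEMENTED and NOT_INSTALLED as informational rather than as failures. The service names in the usage example are hypothetical.

```python
# Minimal sketch: given per-service results copied from the Installer
# (service name -> status string), list the services worth investigating
# before rerunning service verification.
# Assumption: VERIFIED means healthy; NOT_IMPLEMENTED and NOT_INSTALLED are
# treated as informational. This grouping is the sketch's own interpretation.

STATUSES = {
    "FAILED_TO_EXECUTE",
    "NOT_IMPLEMENTED",
    "NOT_INSTALLED",
    "NOT_RUNNING",
    "NOT_STARTED",
    "RUNNING_NOT_RESPONDING",
    "VERIFIED",
}
INFORMATIONAL = {"NOT_IMPLEMENTED", "NOT_INSTALLED"}


def needs_attention(results: dict[str, str]) -> list[str]:
    """Return the sorted names of services whose status suggests a problem."""
    flagged = []
    for service, status in results.items():
        if status not in STATUSES:
            raise ValueError(f"unknown status {status!r} for {service}")
        if status != "VERIFIED" and status not in INFORMATIONAL:
            flagged.append(service)
    return sorted(flagged)


if __name__ == "__main__":
    # Hypothetical results, for illustration only.
    sample = {
        "opentsdb": "VERIFIED",
        "collectd": "RUNNING_NOT_RESPONDING",
        "hue": "NOT_IMPLEMENTED",
        "drill": "NOT_RUNNING",
    }
    print(needs_attention(sample))  # ['collectd', 'drill']
```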

Service Verification Logs

On each node where a service is installed, logged output from the service verification feature is saved to:

$MAPR_HOME/<service>/<service>-<version>/var/log/<service>/verify_service.<date>
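The following Python sketch builds that path and selects the newest verify_service log on the local node. It makes two assumptions. First, MAPR_HOME defaults to /opt/mapr when the variable is unset, as in the example path. Second, the <date> suffix uses the YYYYMMDD_HHMMSS form seen in verify_service.20210503_101756. The helper names are hypothetical.

```python
# Minimal sketch: locate the most recent verify_service log for a service,
# following the documented layout
#   $MAPR_HOME/<service>/<service>-<version>/var/log/<service>/verify_service.<date>
# Assumptions: MAPR_HOME falls back to /opt/mapr when unset, and <date> is
# YYYYMMDD_HHMMSS, so sorting names lexically also sorts them by time.

import os
from pathlib import Path
from typing import Optional


def verify_log_dir(service: str, version: str,
                   mapr_home: Optional[str] = None) -> Path:
    """Build the log directory for one installed service version."""
    home = mapr_home or os.environ.get("MAPR_HOME", "/opt/mapr")
    return Path(home) / service / f"{service}-{version}" / "var" / "log" / service


def latest_verify_log(service: str, version: str,
                      mapr_home: Optional[str] = None) -> Optional[Path]:
    """Return the newest verify_service.<date> file, or None if none exists."""
    log_dir = verify_log_dir(service, version, mapr_home)
    if not log_dir.is_dir():
        return None
    logs = sorted(log_dir.glob("verify_service.*"))
    return logs[-1] if logs else None


if __name__ == "__main__":
    print(verify_log_dir("opentsdb", "2.4.0", "/opt/mapr"))
    # /opt/mapr/opentsdb/opentsdb-2.4.0/var/log/opentsdb
    print(latest_verify_log("opentsdb", "2.4.0"))
```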

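For example, the following listing shows the OpenTSDB log directory on one node, which includes a verify_service log file:

# pwd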

/opt/mapr/opentsdb/opentsdb-2.4.0/var/log/opentsdb

# ls

metrics_tmp ot_purgeData.log-20210414.gz

opentsdb_daemon.log ot_purgeData.log-20210415.gz

opentsdb.err ot_purgeData.log-20210416.gz

opentsdb_install.log ot_purgeData.log-20210417.gz

opentsdb.out ot_purgeData.log-20210418.gz

opentsdb_scandaemon.log ot_purgeData.log-20210419.gz

opentsdb_scandaemon_query.log ot_purgeData.log-20210420.gz

opentsdb_startup.log ot_purgeData.log-20210421.gz

opentsdb_startup.log.1 ot_purgeData.log-20210422.gz

opentsdb_startup.log.2 ot_purgeData.log-20210423.gz

opentsdb_startup.log.3 ot_purgeData.log-20210424.gz

opentsdb_startup.log.4 ot_purgeData.log-20210425.gz

ot_purgeData.log ot_purgeData.log-20210426.gz

ot_purgeData.log-20210404.gz ot_purgeData.log-20210427.gz

ot_purgeData.log-20210405.gz ot_purgeData.log-20210428.gz

ot_purgeData.log-20210406.gz ot_purgeData.log-20210429.gz

ot_purgeData.log-20210407.gz ot_purgeData.log-20210430.gz

ot_purgeData.log-20210408.gz ot_purgeData.log-20210501.gz

ot_purgeData.log-20210409.gz ot_purgeData.log-20210502.gz

ot_purgeData.log-20210410.gz ot_purgeData.log-20210503

ot_purgeData.log-20210411.gz queries.log

ot_purgeData.log-20210412.gz verify_service.20210503_101756

ot_purgeData.log-20210413.gz

The following is an example of the log output for the OpenTSDB service:

# more verify_service.20210503_101756

Starting verifier at Mon May 3 10:17:59 PDT 2021

checking to see if pid 664447 is alive

pid 664447 is alive

checking to see if opentsdb pid 664447 is responsive

opentsdb responded - rc=0, output = [{"metric":"cpu.percent","tags":{"clustername":"markmapr62.mip.storage.hpecorp.net","clusterid":"6923574301854689047"},"aggregateTags":["fqdn","cpu_class","cpu_core"],"dps":{"1620062220":7999.999988058591,"1620062220":7999.999968097525,"1620062221":7999.999950431058,"1620062223":7999.999897014358,"1620062226":7999.999865572759,"1620062227":7999.999838931619,"1620062230":7999.999799699798,"1620062230":7999.999990135091,"1620062231":7999.999993930984,"1620062233":8000.000005408324,"1620062236":8000.0000165668525,"1620062237":8000.00002602172,"1620062240":8000.000039944967,"1620062240":8000.000010000002,"1620062241":8000.000013042937,"1620062243":8000.000022243668,"1620062246":8000.000031188815,"1620062247":8000.000038768231,"1620062250":8000.000049929692,"1620062250":7999.999869915985,"1620062251":7999.999874489055,"1620062253":7999.9998883162625,"1620062256":7999.999901759388,"1620062257":7999.999913150034,"1620062260":7999.999929923924,"1620062260":8000.000010010003,"1620062261":8000.000009999326,"1620062263":8000.000009967044,"1620062266":6806.882651460229,"1620062267":5932.870612714869,"1620062270":4333.55159683651,"1620062270":3833.0523301108274,"1620062271":2733.737487114993,"1620062273":1134.29884221571,"1620062276":719.1767068273092}}]

Possible return codes in the log output are:

- 0: running and responding to a simple interaction test

- 1: not running

- 2: running but not responding to a simple interaction test

- 3: not started
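In the OpenTSDB example, the return code appears on the line where the verifier reports the service's response (rc=0). The following Python sketch extracts that code and maps it to the meanings listed above. The regular expression is based only on the OpenTSDB example and is an assumption. A log for a service that is not running may contain no rc= line at all. In that case, the sketch reports that no return code was found.

```python
# Minimal sketch: summarize a verify_service log by extracting the return
# code from a line such as "opentsdb responded - rc=0, output = ..." and
# mapping it to the documented meanings.
# Assumption: the "rc=N" format comes from the OpenTSDB example; other
# services may log differently.

import re
from typing import Optional

RC_MEANINGS = {
    0: "running and responding to a simple interaction test",
    1: "not running",
    2: "running but not responding to a simple interaction test",
    3: "not started",
}

RC_PATTERN = re.compile(r"\brc=(\d+)")


def parse_return_code(log_text: str) -> Optional[int]:
    """Return the first rc=N value found in the log text, or None."""
    match = RC_PATTERN.search(log_text)
    return int(match.group(1)) if match else None


def describe(log_text: str) -> str:
    """Return a one-line summary of the verification result."""
    rc = parse_return_code(log_text)
    if rc is None:
        return "no return code found"
    return f"rc={rc}: {RC_MEANINGS.get(rc, 'unknown return code')}"


if __name__ == "__main__":
    # Shortened from the OpenTSDB example log (JSON output truncated).
    sample = (
        "Starting verifier at Mon May 3 10:17:59 PDT 2021\n"
        "checking to see if pid 664447 is alive\n"
        "pid 664447 is alive\n"
        "checking to see if opentsdb pid 664447 is responsive\n"
        'opentsdb responded - rc=0, output = [{"metric":"cpu.percent"}]\n'
    )
    print(describe(sample))
    # rc=0: running and responding to a simple interaction test
```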