Running Hive Queries in Zeppelin

This section contains samples of Apache Hive queries that you can run in your Apache Zeppelin notebook.

Prerequisites

Procedure

-

Using the shell interpreter, create a source data file:

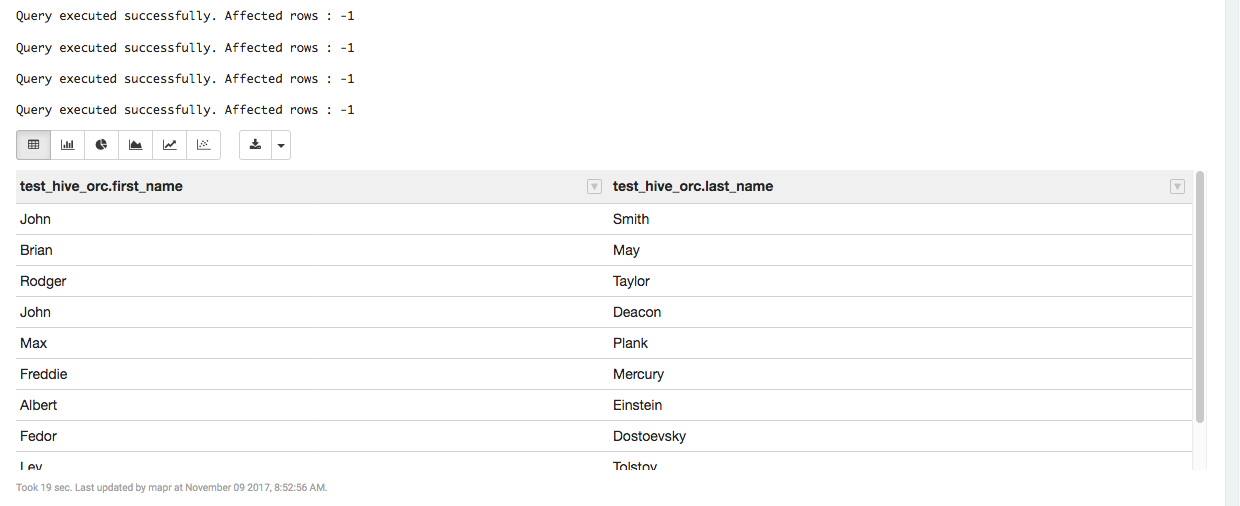

%sh cat > /tmp/test.data << EOF John,Smith Brian,May Rodger,Taylor John,Deacon Max,Plank Freddie,Mercury Albert,Einstein Fedor,Dostoevsky Lev,Tolstoy Niccolo,Paganini EOF -

Copy the file to the MapR File System:

To use POSIX shell commands like

cp, you must have a MapR filesystem mount point in your container. The example below assumes your mount point is/maprand your cluster name ismy.cluster.com:%sh cp /tmp/test.data /mapr/my.cluster.com/user/mapruser1%sh hadoop fs -put /tmp/test.data /user/mapruser1 -

Run the Hive code using the Hive JDBC interpreter:

%hive -- create and load Hive table create table test_hive(first_name string, last_name string) ROW FORMAT DELIMITED FIELDS TERMINATED BY ','; load data inpath '/user/mapruser1/test.data' overwrite into table test_hive; -- create and load Hive ORC table create table test_hive_orc(first_name string, last_name string) stored as orc tblproperties ("orc.compress"="NONE"); insert overwrite table test_hive_orc select * from test_hive; -- query the Hive ORC table select * from test_hive_orc;

The output looks like the following:

-

Drop the Hive tables created in the example:

%hive drop table test_hive; drop table test_hive_orc;