Setting Up CDC

Describes the requirements and how to set up Change Data Capture (CDC). CDC requires the following:

- MapR Database source table (JSON or binary)

- MapR Event Store For Apache Kafka changelog stream

- MapR Event Store For Apache Kafka stream topic

- MapR Database table changelog relationship between the source table and the destination stream topic

Before Setting Up CDC

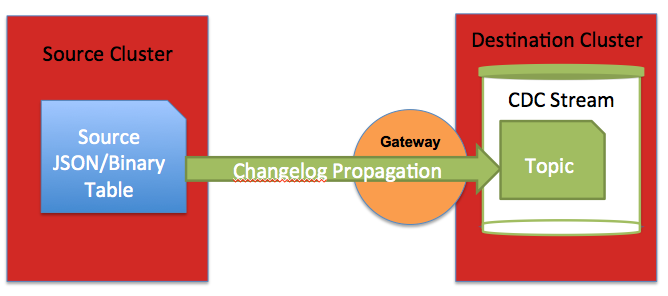

The destination MapR Event Store For Apache Kafka stream can be in the same cluster as the MapR Database source table or it can be on a remote MapR cluster. Regardless of where the stream is located, propagating changed data requires a gateway.

Typically, gateways are set up by installing the gateway on the destination cluster and specifying the gateway node(s) on the source cluster. However, if the stream and the MapR Database source table are in the same cluster, install the gateway on that cluster and specify the gateway node(s) in the same cluster. See Administering MapR Gateways and Configuring Gateways for Table and Stream Replication.

The diagram above shows a simple CDC data model with one source table to one destination topic on one stream; however, more complex CDC scenarios can be implemented with multiple gateways. See Data Modeling and CDC.

Create Table

The following code examples show how to:

- Create and mount a volume for a source table.

- Create a new binary table. The -tabletype parameter's default setting is binary, so you don't need to specify this parameter.

- Create a new JSON table.

// Create Volume for table

maprcli volume create -name tableVolume -path /tableVolume

// Create Binary table

maprcli table create -path /tableVolume/cdcTable

// Create JSON table

maprcli table create -path /tableVolume/cdcTable -tabletype json

// Create Volume for table

https://10.10.100.17:8443/rest/volume/create?name=tableVolume&path=/tableVolume

// Create Binary table

https://10.10.100.17:8443/rest/table/create?path=/tableVolume/cdcTable

// Create JSON table

https://10.10.100.17:8443/rest/table/create?path=/tableVolume/cdcTable&tabletype=json

Create Stream

A MapR Event Store For Apache Kafka changelog stream must be created for the propagated changed data records by using the maprcli stream create command with the -ischangelog parameter. See maprcli stream create or use the Control System.

- If the stream topic create command is used to create a stream topic, the number of topic partitions can be set at creation time and is then locked.

- If the table changelog add command is used to add a stream topic (as well as establish a relationship between the source table and the changelog stream), the number of topic partitions is inherited from the changelog stream and is locked.

The following code examples show how to:

- Create and mount a volume for a changelog stream.

- Create a changelog stream using the default partitions value of one (1).

- Create a changelog stream changing the default partitions to three (3).

// Create Volume for stream

maprcli volume create -name streamVolume -path /streamVolume

// Create stream (default partitions: 1)

maprcli stream create -path /streamVolume/changelogStream -ischangelog true

// Create stream (default partitions: 3)

maprcli stream create -path /streamVolume/changelogStream -ischangelog true -defaultpartitions 3

// Create Volume for stream

https://10.10.100.17:8443/rest/volume/create?name=streamVolume&path=/streamVolume

// Create stream (default partitions: 1)

https://10.10.100.17:8443/rest/stream/create?path=/streamVolume/changelogStream&ischangelog=true

// Create stream (default partitions: 3)

https://10.10.100.17:8443/rest/stream/create?path=/streamVolume/changelogStream&ischangelog=true&defaultpartitions=3

Create Topic

A MapR Event Store For Apache Kafka stream topic must be created for the changed data records. This can be accomplished in a variety of ways:

- Use the maprcli table changelog add command. This command establishes a changelog relationship between the source table and the destination stream topic.

- Use the maprcli stream topic create command.

- Use the REST equivalent of the above maprcli commands.

- Use the Control System.

A topic's number of partitions is locked once the topic is created (including when the maprcli table changelog add command is used to establish the changelog relationship). The stream topic edit command cannot be used to modify the topic's number of partitions.

- If the changelog stream's default partitions are acceptable for the stream topic (because the topic inherits the stream's default partitions), you can either:

  - Go directly to adding the changelog relationship with the maprcli table changelog add command and create the topic there.

  - Create the topic with the stream topic create command and not specify the -partitions parameter.

- If you want to change the topic's partitions, create the topic with the stream topic create command and set the -partitions parameter.

- If you use the Control System, either of the above methods is available.
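The choice above reduces to whether the -partitions parameter is passed to stream topic create. A minimal shell sketch, assuming a hypothetical helper named topic_create_cmd (not part of maprcli) that only prints the command it would run:

```shell
# Hypothetical helper (not a maprcli feature): prints, but does not run,
# the topic-creation command.
# Usage: topic_create_cmd STREAM_PATH TOPIC [PARTITIONS]
topic_create_cmd() {
  stream_path="$1"
  topic="$2"
  partitions="${3:-}"
  cmd="maprcli stream topic create -path ${stream_path} -topic ${topic}"
  # Leaving out -partitions lets the topic inherit the changelog
  # stream's default partitions.
  if [ -n "$partitions" ]; then
    cmd="${cmd} -partitions ${partitions}"
  fi
  echo "$cmd"
}

# Inherit the stream's default partitions
topic_create_cmd /streamVolume/changelogStream cdcTopic1
# Set an explicit partition count at creation time
topic_create_cmd /streamVolume/changelogStream cdcTopic1 5
```

Printing the command rather than executing it makes it easy to review before running, which matters here because the partition count cannot be changed after the topic is created.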

The following code examples show how to create a stream topic and set its number of partitions to five (5).

// Create topic (partitions: 5)

maprcli stream topic create -path /streamVolume/changelogStream -topic cdcTopic1 -partitions 5

// Create topic (partitions: 5)

https://10.10.100.17:8443/rest/stream/topic/create?path=/streamVolume/changelogStream&topic=cdcTopic1&partitions=5

Add Changelog

A table changelog relationship must be added between the source table and the destination stream topic by using the maprcli table changelog add command or the Control System. By adding a table changelog relationship, you are creating an environment that propagates changed data records from a source table to a MapR Event Store For Apache Kafka stream topic.

- If you are creating a changelog relationship and the stream topic does not exist, specify the stream path and topic.

- If you are creating a changelog relationship and the stream topic does exist, specify the stream path and topic AND the -useexistingtopic parameter. The -useexistingtopic parameter can only be used with a changelog stream's newly created topic or a previous changelog stream topic for the same source table.
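As a rough illustration of the two cases above, the following sketch picks the flag by first checking whether the topic exists. The helper name changelog_add_cmd is hypothetical, and the check assumes that maprcli stream topic info returns a non-zero exit status for a missing topic; verify that behavior on your cluster before relying on it.

```shell
# Hypothetical helper: prints the changelog-add command for a table and a
# stream topic, adding -useexistingtopic only when the topic already exists.
# Assumption: `maprcli stream topic info` exits non-zero for a missing topic.
# Usage: changelog_add_cmd TABLE_PATH STREAM_PATH TOPIC
changelog_add_cmd() {
  table_path="$1"
  stream_path="$2"
  topic="$3"
  cmd="maprcli table changelog add -path ${table_path} -changelog ${stream_path}:${topic}"
  if maprcli stream topic info -path "$stream_path" -topic "$topic" >/dev/null 2>&1; then
    # An existing topic must be newly created or a previous changelog
    # topic for the same source table.
    cmd="${cmd} -useexistingtopic true"
  fi
  echo "$cmd"
}

changelog_add_cmd /tableVolume/cdcTable /streamVolume/changelogStream cdcTopic1
```

The helper prints the command instead of running it, so the chosen form can be reviewed first.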

If you do not want the existing data in the source table propagated to the stream topic, set the -propagateexistingdata parameter to false. The default is true.

To create the changelog relationship in a paused state, set the -pause parameter to true. The change data records are stored in a bucket until you resume the changelog relationship; at this point, the stored change data records are propagated to the stream topic. See table changelog resume for more information.

The following code examples show how to:

- Create a changelog relationship between the source table and the destination stream topic, where the stream topic does not exist.

- Create a changelog relationship between the source table and the destination stream topic, where the stream topic does exist.

If the stream is on a remote cluster, specify the changelog path as /mapr/<remote-cluster>/path/to/stream:topic.

maprcli table changelog add -path /tableVolume/cdcTable -changelog /streamVolume/changelogStream:cdcTopic1

maprcli table changelog add -path /tableVolume/cdcTable -changelog /streamVolume/changelogStream:cdcTopic1 -useexistingtopic true

https://10.10.100.17:8443/rest/table/changelog/add?path=/tableVolume/cdcTable&changelog=/streamVolume/changelogStream:cdcTopic1

https://10.10.100.17:8443/rest/table/changelog/add?path=/tableVolume/cdcTable&changelog=/streamVolume/changelogStream:cdcTopic1&useexistingtopic=true

The following example verifies that the table changelog relationship exists:

maprcli table changelog list -path /tableVolume/cdcTable

What's Next: Modifying and Consuming Data

To generate CDC changed data records to consume, perform inserts, updates, and deletes on the MapR Database table data. See CRUD operations on documents using mapr dbshell for JSON documents, mapr hbshell for binary data, Java applications for MapR Database JSON, and C or Java applications for MapR Database Binary.

A MapR Event Store For Apache Kafka Kafka/OJAI consumer application subscribes to the topic and consumes the change data records. See Consuming CDC Records for more information.
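The setup steps on this page can be strung together as one script. The following is a sketch rather than a supported tool: the run and setup_cdc helpers and the DRY_RUN variable are hypothetical names, and the paths are the example values used on this page. By default it only prints the commands; it assumes a configured gateway and sufficient permissions when run with DRY_RUN=0.

```shell
#!/usr/bin/env bash
# Sketch of the CDC setup steps using the example paths from this page.
# DRY_RUN=1 (the default here) prints each command; DRY_RUN=0 executes it.
set -e

DRY_RUN="${DRY_RUN:-1}"
TABLE="/tableVolume/cdcTable"
STREAM="/streamVolume/changelogStream"
TOPIC="cdcTopic1"

# Print the command in dry-run mode, otherwise execute it.
run() {
  if [ "$DRY_RUN" = "1" ]; then
    echo "$*"
  else
    "$@"
  fi
}

setup_cdc() {
  # 1. Volumes for the source table and the changelog stream
  run maprcli volume create -name tableVolume -path /tableVolume
  run maprcli volume create -name streamVolume -path /streamVolume
  # 2. JSON source table
  run maprcli table create -path "$TABLE" -tabletype json
  # 3. Changelog stream (default partitions: 1)
  run maprcli stream create -path "$STREAM" -ischangelog true
  # 4. Changelog relationship; the topic is created here and inherits
  #    the stream's default partitions
  run maprcli table changelog add -path "$TABLE" -changelog "${STREAM}:${TOPIC}"
  # 5. Verify that the relationship exists
  run maprcli table changelog list -path "$TABLE"
}

setup_cdc
```

Reviewing the dry-run output first is a cheap safeguard, because the topic's partition count is locked once the changelog relationship is added.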